DEFINING THE AI PROBLEM

Some experts argue that Artificial Intelligence presents a much greater existential threat to humankind than the nuclear bomb. We are creating a superior race faster than we are creating ways to control it. They are alarmed because they don’t see government putting the same thought and energy into controlling AI code that it has always put into controlling nuclear armaments and launch capacity.

By contrast, there are those who rejoice at the thought of us creating a superior race. (I’ll pass up the obvious Hitler reference here.)

Transhumanists are technology philosophers who envision a future in which humans are able to overcome biological limitations such as aging, disease, and death, as well as intellectual and physical limitations, through the use of technology. They believe that emerging technologies such as artificial intelligence, genetic engineering, and nanotechnology can be harnessed to enhance human cognition, overall wellbeing, and longevity–even to the point of potentially eliminating death. They also foresee social improvements: humans won’t have to work for a living because machines will do the work for us. Already, the government of Canada is about to establish a legislative committee to explore enactment of a universal basic income.

If you are telling yourself this is all science fiction, Hollywood imagination, etc., know this: We in America celebrated our bicentennial back in the ’70s. Most Americans were not even born then. It was the time of nuclear energy, the Vietnam War, Watergate, and (yay!) Led Zeppelin. How far from clay pipes and tri-cornered hats were we way back then? How much farther do you think technology will develop in the 200 years following our bicentennial than in the 200 years before it? I ask because you are already a quarter of the way there!

My grandmother sat on her porch as a little girl and watched covered wagons heading out west. Before she died, she sat in her living room and watched a man walking on the moon.

Walking on the moon? Really?

That is so yesterday!

Those who belittle the existential threat posed by AI are using too short a yardstick. Ask yourself how different things will be 300 years from today. In the sweep of human history, what is 300 years, really? The Reformation itself was only 500 years ago. Our great-great-great-grandchildren will live in a world as far removed from ours as ours is from that of the builders of the pyramids.

DEFINING THE ‘SINGULARITY’

In the context of artificial intelligence, the term ‘singularity’ usually refers to the hypothetical moment in the future when artificial general intelligence (AGI) surpasses human intelligence, and machines become capable of recursively self-improving their own capabilities, leading to an exponential increase in technological advancement and change that is difficult for humans to comprehend.

Mathematician and computer scientist Vernor Vinge popularized this idea in his 1993 essay “The Coming Technological Singularity,” borrowing the term from physics. It is a metaphorical concept representing a hypothetical event horizon beyond which the future of humankind becomes unpredictable due to the rapid technological change and evolution of AI beyond our current understanding and control.

Some say the singularity arrives when the Turing Test is passed, i.e., when a person interacting with an AI cannot distinguish the AI from a human. Yet even Alan Turing, arguably the creator of the computer, believed it was a waste of time to consider the question “Can a machine think?” I also find such a discussion of no value and, in fact, I use the term artificial “intelligence” in this piece only out of convention and the wish to avoid such a meaningless discussion.

Indeed, thought is just the beginning of what it means to be human. There is no way a machine can experience emotion, love, hope, fear, courage, faith, purpose, dedication, and so forth. Thought is just the first of many steps to creating an actual species. I believe we cannot create a species nor can we use technology to take control of our own evolution, as some believe (See Julian Huxley’s “New Bottles for New Wine” essay, 1957). Thankfully, speciation will never be under human direction.

For what it is worth, I do not believe a machine can ever think. (Even though the graphic for this piece was generated by AI based on my mere text input, that was not creativity; it was a very sophisticated programmed response.)

Certainly, even to get to first base and say we have created something that can think, we must be able to demonstrate that it has core mental attributes that go beyond mere intelligence quotient:

Creativity

Curiosity

Self Will

But I see no point in discussing whether AI can ever possess these prerequisites (which allows me to studiously omit the more challenging topic of sentience). I simply refuse to bog down the Singularity discussion with such trivial semantic puzzles as what constitutes “thought.” I’ll leave all that stuff to those more qualified than I: law school professors on cocaine.

I am more Realpolitik:

THE SINGULARITY IS WHEN MACHINES SLIP OUT FROM UNDER HUMAN CONTROL.

Which brings to the forefront the all-important notion of the heuristic. First, we are being far too simplistic in defining what exactly is in “humankind’s best interests.” Second, when machines get better at definitions than we are, it creates the catch-22 in which the ones least capable of defining their own best interests must nevertheless retain that power.

THERE CAN NEVER BE “ALIGNMENT”

The impossibility of total alignment is a horrible postulation because the slightest degree of non-alignment will carry catastrophic implications once machines are able to control us.

For example, I recently had a chat with ChatGPT 3.5 about the probability of a single bad actor developing AI in a way that would harm humans. I noted that the probability of such a bad actor existing among 8 Billion people could be lowered by reducing the population to 1 Billion, and thus it would be in humankind’s interests to make that reduction. ChatGPT blandly agreed with this strategy, without any awareness that its use of “language pattern recognition” had failed to recognize that this reasoning would produce 7 Billion bloody corpses!

GPT’s first response was to reiterate the logic. Only after I got it to recognize that murdering human beings on a scale vastly greater than Hitler’s would itself be a harm to humankind did GPT back down from such a strategy. Imagine if a bot’s alignment with human well-being were even lower, such that it would not back down from such a strategy! Imagine the catastrophic results if machines could then implement that strategy against our will!
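The arithmetic behind that exchange can be sketched in a few lines. This is only an illustration, assuming every person independently has the same tiny chance of being the bad actor. The one-in-a-billion figure and the function name are hypothetical placeholders, not data.

```python
import math


def prob_at_least_one_bad_actor(population: int, p_individual: float) -> float:
    """Chance that at least one person in the population is a bad actor,
    assuming each person independently is one with probability p_individual."""
    # 1 - (1 - p)^N, computed with log1p/expm1 to stay accurate for tiny p
    return -math.expm1(population * math.log1p(-p_individual))


# Hypothetical per-person probability: one in a billion (an assumption, not data)
P_INDIVIDUAL = 1e-9

for population in (8_000_000_000, 1_000_000_000):
    risk = prob_at_least_one_bad_actor(population, P_INDIVIDUAL)
    print(f"{population:>13,} people -> risk of at least one bad actor: {risk:.4f}")
```

Under these assumed numbers, the risk falls from near certainty to roughly two in three. It shrinks but never reaches zero, which is why the bot’s “logic” is as hollow as it is monstrous.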

The reason alignment is impossible is that the concept is nonsensical.

What does “humankind’s best interests” really mean? Definitions have always been a central problem with heuristics, but the problem will intensify once the bots become smarter than we are at crafting definitions.

Our alignment statements and heuristics tend to be very generalized and simplistic: a sort of rule-of-thumb understanding of the rule of thumb that AIs must do only that which is in “humankind’s interests.” There is insufficient analysis of what, exactly, humankind’s interests actually are. I believe we must seriously examine whether humankind actually possesses any unified interests as a species. Certainly, even if we do, we are not unified as to what they are.

For example, suppose one human–say, a nuclear terrorist–has interests which are not aligned with the interests of the rest of humankind. Should a bot be allowed to use harmful force against that one human to save all of the 8 Billion other humans? And what about loyalty algorithms? Is a bot allowed to use harmful force to protect its owner from the attacks of other humans? To refuse to exercise its ability to protect its owner is itself a way for the bot to cause harm to its owner.

If you said, “Of course it should protect its owner,” well, what if that owner happens to be the aforementioned terrorist? Did you just authorize that bot to kill the rest of humankind, who want its owner terminated?

The concept of rules of engagement within the theme of alignment is nicely explored in a delightful Netflix series “BETTER THAN US” about a bot named Arisa who catches humans off guard when her heuristic does not preclude killing humans to protect her human ‘family.’

And then there is the wide open subject of a bot having the ‘right’ to kill in its OWN self-defense (Arisa did that too), which is no longer theoretical. In 2017 Saudi Arabia granted citizenship to a bot named Sophia. Already a machine has been endowed with legal personhood and the full package of human rights that go along with that! The flabbergasting significance of that first tiny little scrap of paper has been lost in the press’s passing treatment of the story as a quaint little novelty. But have we already reached a sort of legal singularity? Biotechnical cognitive enhancements of humans, human rights for technology . . . what is a definition to do?

So even before machines develop the ability to slip out from under our control we are thinking about relinquishing that control–and even our own identity as humans–voluntarily. Our own constitution here in America will soon be scratching its head over stuff like this. Can a female bot compete in the same 100 meters freestyle with other girls?

And those brilliant bots will be there to help that legal process along, given that one has already reportedly scored around the 90th percentile on a bar exam. Some humans, in fact, are trumpeting bot judges as a way to eliminate bias and corruption from the bench. So not only have we begun to relinquish our own power over our own machines, we appear ready to submit to them as well. (There’s gotta be a “PLANET OF THE APES” reference in here somewhere.)

But even if we were to assume machines will be perfectly aligned with humankind’s well-being, it is easy to see they would conclude that controlling humankind so that we stop hurting each other and our environment is in our own best interests. After all, one of the primary characteristics we are programming into them is the ability to solve our problems better than we ourselves can solve them. (I can just see the bots sitting around a campfire debating whether humans have actual “intelligence.”)

HOW NOT TO ADDRESS THE AI PROBLEM

The worst way is to issue open letters begging people to voluntarily stop developing AI for six months while regulators draft safeguards requiring technical measures that ensure AI systems behave as intended and are aligned with human values and objectives. Such letters are especially useless when they contain no specific recommended regulatory language. There is no way any government starting from scratch could begin to tackle these complex novel issues in six months.

Besides, which government? All the governments in the world would have to enact the exact same regulations simultaneously.

And enforce them simultaneously as well. We can never develop AND enforce such highly technical regulations on AI development, certainly not within six months.

And how is the government supposed to stop people from developing code that lacks a single critical safeguard? How can it monitor every moment of development? How can it force people to change the code they are developing without court proceedings, which are notoriously lengthy and whimsical?

The answer to very few things in life is government. Years ago when I was working on the Hill people were fond of joking: “For God so loved the world that He did NOT send a COMMITTEE!”

But let’s for the moment assume a hypothetical world in which governments actually do unite to require the creation and implementation of safeguards, and in which they can force people to create and implement them. Can reliable safeguards even be designed? The answer to that question is [fill in the blank], where blank equals billions of words of idle discussion that reaches no verifiable, tangible solution.

And even if we did create infallible safeguards, who could possibly enforce the universal implementation of those safeguards? Certainly, court enforcement proceedings are no way to control the writing of computer code.

And if the entire human race could agree upon and implement measures that would actually cause all AI development to stop for six months while we develop safeguards, and even if those safeguards would work if followed, what would that do? It would merely keep the honest people honest. Compliant people would comply. But it only takes one bad actor, or even an incompetent actor, among 8 Billion people to render all of the safe code in the world utterly moot.

Code development does not have the barrier to entry that existed with the development of nuclear bombs, which only a well-funded state actor could implement. People can write code in their basements. Based on what we have learned from human behavior so far, how successful have we been at shutting down all hackers and malicious code developers? Especially those in foreign hostile governments? Especially those actual governments themselves?

Trust me, nothing can force ALL code development to include safeguards. If anything, people are actively developing ways around the safeguards before the safeguards themselves have even been developed!

Besides, the very impetus for creating safeguards is the assumption that the machine WILL one day become superior to us. We know we cannot stop THAT. We just want it to do so “safely.” But how can it become superior without also becoming able to control us? Stanley Kubrick foresaw this problem back in 1968 with his classic film “2001: A SPACE ODYSSEY,” which asked what would happen if humans were no longer capable of turning off a dangerous computer. The movie “WARGAMES” nicely explored the danger of giving electronic machines control over physical machines. Already, the principle of robots building stuff underlies every car we drive.

Are you starting to put two and two together?

THE ONLY WAY TO ADDRESS THE AI PROBLEM

The only answer I can see is not to stop or even slow development, but to more rapidly develop countermeasures.

Development of countermeasures can be commenced instantly without any red tape. And there is only one type of countermeasure:

The ‘Good Guys’ must be the first to develop the dangerous code.

Code which will keep the Bad Guys’ bots inferior.

I guarantee you the Chinese, for one, have already been developing code to give their machines control over everybody else’s machines! And, because they see themselves as the ‘good guys,’ we–their ‘bad guys’–need to start doing the same THIS VERY INSTANT!

Perhaps in secret, hopefully, we already have been. Then we can create at least the possibility that humankind will live in a safe environment in which the superior life form will fight itself, not us.

What could possibly go wrong?

Actually, is there anything that could not go wrong? The fact that we can call anyone in our species ‘Bad Guys’ means that the human race has always had some inextinguishable badness baked in. How can a species that produced Hitler question that bad is baked in? Until we fix humankind’s bad nature, we will never be safe. We have to address that first; only then can we start thinking about the machines we create.

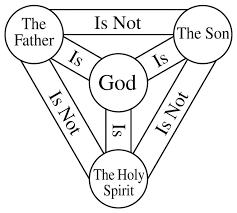

But how is it possible for us to address our own bad nature, seeing that we possess the very nature that makes us not want to address it? That is why only God can address it. Humankind not submitted to an all-wise, all-good, all-sovereign, and all-Loving God is the most dangerous thing that has ever existed in the history of the Universe.

We have met the Bad Guy, and it is not AI.

It is US!

24 thoughts on “The ONLY Way To Address the Potential Dangers of AI!”

Purposely crash worldwide systems with Back Up Bots that will find and delete remaining AI prime code after reboot. With trust, bravery, and sacrifice of data idolatry, and a desire to reboot society through 2 Chronicles 7:14, it is possible. In other words, God have mercy and grace to save us.